Google may have accidentally leaked SEO secrets, and experts Rand Fishkin & Mike King have revealed key findings. We share the top 5 takeaways for marketers to maximise their website's ranking and SEO performance.

Last week, two prominent voices in the SEO industry, Rand Fishkin of SparkToro/Moz and Mike King of iPullRank, began covering a large leak of API documentation called GoogleApiContentWarehouseV1. It’s believed Google mistakenly uploaded this to GitHub, where it was obtained by a non-Googler and shared with Rand. Google has since confirmed the leak is authentic but notes that the data is incomplete and some of it is outdated, as it covers only a small part of the overall system.

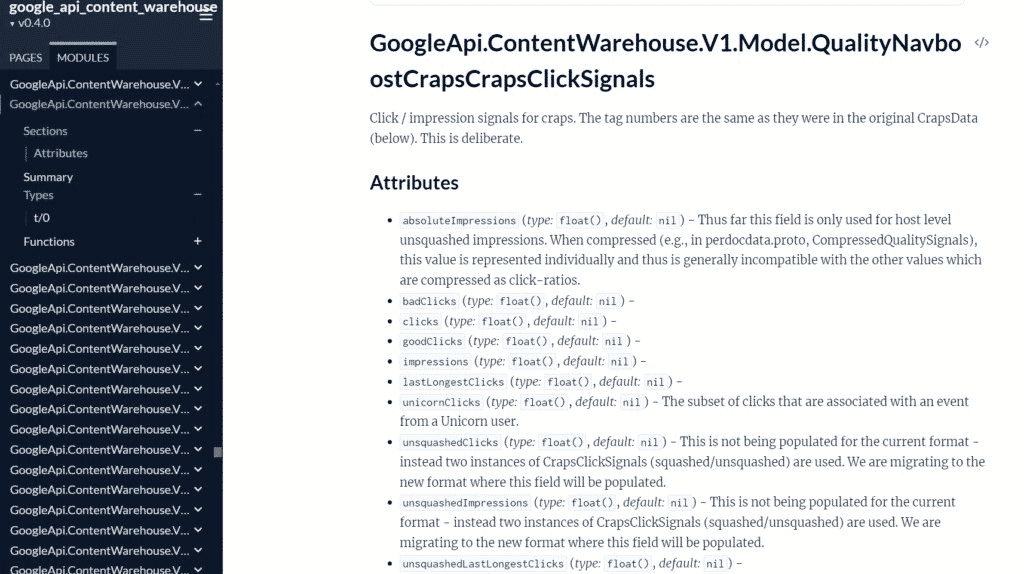

The package contained API documentation like the example above and was uploaded in early March 2024. Rand & Mike believe it to be reasonably current: it mentions LLMs, but not AI Overviews. The documents have since been removed.

After obtaining the documents, Rand and Mike analysed some of the data and last week shared their early findings, cross-referencing the API documentation with patents and public statements from Googlers like Matt Cutts, John Mueller and Gary Illyes.

As Rand and Mike have noted, the leaked documentation includes no weighting or scale for any factor. It is a list of attributes that Google collects from websites for use in ranking search results, which SEOs call "ranking factors". Google engineers would use this API in combination with other systems to run queries and build new systems or tests, as everything appears to be linked in what Mike describes as a monolithic repository: a shared environment where all code and systems are connected. However, the presence of an attribute does not prove it is a current ranking factor. The analysis also notes that some black-and-white statements by Googlers, such as "we do not use user click data", are clearly untrue.

This is an evolving story, and Rand & Mike have posted their initial assessments over the past week. No doubt, more will come from them and others as the documentation is more thoroughly reviewed by SEOs worldwide.

You can go right to the source and read Rand’s post and Mike’s post (and his follow-up on Search Engine Land). These guys are very thorough, and they’re reviewing API documentation, so the content is technical and a bit dense.

I’ve written this for marketing generalists and managers who know SEO is important and could use some direction and context.

I’ve picked the top 5 findings from the leak. For each, I provide high-level context, summarise what the leak shows and highlight the learnings that validate the SEO tactics you should focus on to maximise your SEO performance.

- Click Data from User Searches is among the most important signals for ranking

- Site Signals are important for SEO

- Links are important: quality may be determined by the referring site's authority and traffic

- What are Twiddlers?

- Being an Expert Entity is important for high-risk industries

If you’d like the TLDR version, you can skip to the Summary at the end.

Top 5 SEO Findings from the Google Leak

Click Data from User Searches is among the most important Signals for Ranking

Context

It has long been suspected that Google uses click data from Chrome and Android to reorder search results.

Ten years ago, Rand Fishkin ran an experiment asking his Twitter followers to run a search, click the #1 result, go back to the search results, then click the #7 result and stay active on that site. The #7 result moved from the bottom of page 1 to #1. He repeated the test a number of times with similar results.

Findings

The US Department of Justice (DOJ) antitrust case against Google highlighted a ranking system called NavBoost, which takes into account clickstream data (huge amounts of click data from Google plugins, Chrome and Android browsing). Attributes like GoodClicks and BadClicks appear in the leaked API documentation. This suggests, as many suspected, that Google uses signals like short clicks (bounces) and pogo-sticking (going back and forth between search results until you are satisfied) to gauge user satisfaction, and may reorder search results based on the signals it receives.

The DOJ testimony goes on to say that while NavBoost brings the web results together, another system called Glue handles everything else (images, maps, YouTube) and pulls it into the search results pages we know, ranked by where users click.
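For readers who like to see an idea in concrete terms, here is a toy Python sketch of how clicks might be labelled good or bad. It is not Google's code: the 30-second threshold, the `Click` fields and the rule that a quick return to the results page counts as a bad click are all assumptions for illustration, loosely modelled on the GoodClicks/BadClicks attributes and the pogo-sticking behaviour described above.

```python
from dataclasses import dataclass

# Hypothetical cut-off: any real threshold Google uses is not public.
SHORT_CLICK_SECONDS = 30


@dataclass
class Click:
    url: str
    dwell_seconds: float    # time spent on the page before the next action
    returned_to_serp: bool  # did the user go back to the search results?


def classify(click: Click) -> str:
    """Label a quick return to the results (pogo-sticking) as 'bad', anything else as 'good'."""
    if click.returned_to_serp and click.dwell_seconds < SHORT_CLICK_SECONDS:
        return "bad"
    return "good"


def click_tally(clicks: list[Click]) -> dict[str, dict[str, int]]:
    """Count good and bad clicks per URL, loosely echoing the GoodClicks/BadClicks attributes."""
    tally: dict[str, dict[str, int]] = {}
    for click in clicks:
        counts = tally.setdefault(click.url, {"good": 0, "bad": 0})
        counts[classify(click)] += 1
    return tally


session = [
    Click("a.com/guide", 8, True),     # bounced straight back to the results
    Click("b.com/guide", 240, False),  # stayed and stopped searching
    Click("a.com/guide", 95, True),    # came back, but only after reading
]
print(click_tally(session))
# {'a.com/guide': {'good': 1, 'bad': 1}, 'b.com/guide': {'good': 1, 'bad': 0}}
```

The numbers matter less than the shape: a page that sends users straight back to the results keeps collecting bad clicks, whatever position it started in.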

Learnings

SEO has been focused on what it takes to rank and get the click, but what happens after the click may matter even more.

Ensuring your content answers the query, loads quickly, has good UX/UI functionality and gets users to a solution will hopefully keep them on your website and lead to deeper engagement with your content.

Google is paying attention to engagement on your page compared with competing pages, and may rank your content higher if it sends good signals.

There’s something to be said here about brand and reputation; if your brand is well-recognised and trusted, even if it's not #1, you may get more clicks and, in turn, rank higher if you've invested in a good onsite experience.

Site Signals are important for SEO

Context

Google has said multiple times that there is no domain authority metric, something that Rand's company Moz became famous for with its Domain Authority (DA) score, which became a way for SEOs and website owners to measure their strength once Google's PageRank toolbar was retired.

Findings

A Site Authority metric was found in the leaked API documentation. It was also noted that a homepage's authority impacts the whole site's authority.

Surprisingly, page title structure appears to benefit the whole site, not just individual pages, which confirms we are on the right path in optimising all title tags.

Exact keywords in links are important. Link using the exact phrase you want that page to rank for (ensure the anchor text contains your target keyword).

Learnings

It's nice to see the value of SEO fundamentals validated. Effort spent uncovering the right keywords, putting them into page titles and headings, and using them prominently as internal link anchor text helps those pages rank. Pleasingly, optimising the parts can also have a macro impact on the site overall. Go figure!

Links are important - quality may be determined by the referring site's authority and traffic

Context

We have known links are important for a long time. Google has stated that links to your website and the helpfulness of your content are the key ingredients for improved rankings, visibility and organic traffic.

Findings

Interestingly, the documentation points to a relationship between link quality and traffic to the site. Here's how it is summarised in the analysis of the leak:

"Google has three buckets/tiers for classifying their link indexes (low, medium, high quality). Click data is used to determine which link graph index tier a document belongs to."
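As a rough illustration of what tiering by click data could mean, the Python sketch below sorts linking pages into the three tiers by monthly clicks and weights their links accordingly. The thresholds, weights and function names are invented for this example; the leak, as summarised, names the tiers but not how they are calculated.

```python
# Invented cut-offs (monthly clicks), checked from the highest tier down, and invented weights.
TIER_THRESHOLDS = [(1000, "high"), (50, "medium")]
TIER_WEIGHTS = {"high": 1.0, "medium": 0.5, "low": 0.0}


def link_index_tier(monthly_clicks: int) -> str:
    """Place a linking page in a tier based on how often it is clicked."""
    for minimum, tier in TIER_THRESHOLDS:
        if monthly_clicks >= minimum:
            return tier
    return "low"


def link_weight(source_monthly_clicks: int) -> float:
    """Weight a link by its source page's tier; low-tier links count for nothing here."""
    return TIER_WEIGHTS[link_index_tier(source_monthly_clicks)]


print(link_index_tier(5000), link_weight(5000))  # high 1.0
print(link_index_tier(120), link_weight(120))    # medium 0.5
print(link_index_tier(3), link_weight(3))        # low 0.0
```

Giving low-tier links zero weight mirrors the idea that a link from a page nobody visits is, at best, ignored.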

There are also measures to identify if you are building links too quickly, and penalties are handed out algorithmically. So that's a no to link-building services.
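One simple way to picture "building links too quickly" is a spike check against a site's own history. This hypothetical Python sketch flags the latest week if its new referring links exceed three times the average of earlier weeks; the factor, the weekly window and the function name are assumptions, not values from the leak.

```python
from statistics import mean


def link_velocity_spike(weekly_new_links: list[int], factor: float = 3.0) -> bool:
    """Return True if the latest week's new links exceed `factor` x the earlier weekly average."""
    if len(weekly_new_links) < 2:
        return False  # not enough history to compare against
    *history, latest = weekly_new_links
    baseline = max(mean(history), 1.0)  # floor of 1 so a near-zero history isn't hypersensitive
    return latest > factor * baseline


print(link_velocity_spike([4, 6, 5, 5, 9]))   # False: 9 is within 3 x the average of 5
print(link_velocity_spike([4, 6, 5, 5, 40]))  # True: 40 is well over 3 x 5
```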

Learnings

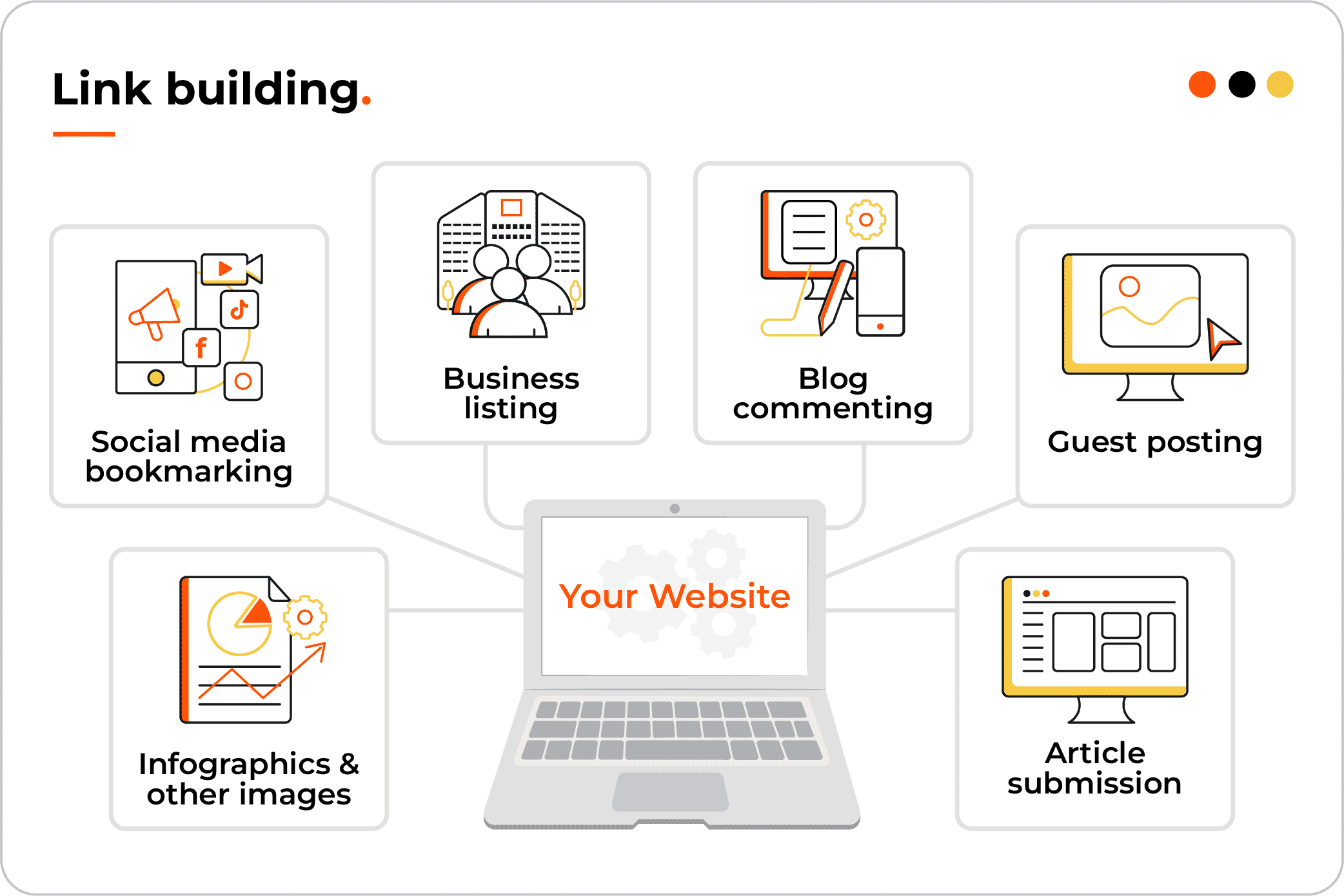

Link building took off many years ago when the PageRank toolbar and Moz’s Domain Authority handed the SEO community a scoring system for site quality. That spurred the industry towards buying links as a practice, which Google spent years cleaning up with a periodic link-penalty algorithm called Penguin.

Google says don't buy links; make content that makes people want to link to you. Building links does work, but building links on sites no one will click or visit will, at best, be ignored and, at worst, get you penalised.

So aim to create content that relevant websites will pick up and link to. Content collaborations are one established practice: beauty brands work with fashion publishers, news sites publish banking research and whitepapers, and deal sites post about eCommerce deals (as long as you're not Forbes Coupons, an example of site authority abuse*).

Websites get links and mentions for many reasons, but if those links get a decent amount of clicks coming from them (referral traffic), they will count more.

This is a good time to talk about Twiddlers.

What are Twiddlers?

Context

We hadn't heard of Twiddlers before, and searching Google turns up little about them from before the leak.

Findings

The documentation describes Twiddlers as systems that run after the primary ranking system and re-rank the results based on specific signals. This is what NavBoost and Glue do, alongside many other systems like QualityBoost, RealtimeBoost and, most notably, the Panda algorithm, which made waves across the SEO world over the last decade as Google strove to clean up low-quality content and spam.

Mike found further documentation on Panda and tied it to Google patents he has referenced in the past, postulating that Panda is largely about building a scoring modifier from distributed signals related to user behaviour and external links.

In effect, once a query or topic was on Panda’s watch list, each periodic refresh would look for improved, diverse link signals to the ranking pages, then check for good clicks and engaged user behaviour observed by NavBoost. If the score came out positive, rankings would improve; if not, they’d decline.
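To make the twiddler concept more concrete, here is a hypothetical Python sketch: a primary ranking produces scores, then a chain of twiddlers each applies a multiplier before the results are re-sorted. The engagement and link twiddlers are stand-ins inspired by the NavBoost and Panda descriptions above; their signals, numbers and names are invented, and Google's actual twiddler interface is not public.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Result:
    url: str
    score: float            # score from the primary ranking system
    good_click_rate: float  # share of clicks labelled "good" (hypothetical signal)
    fresh_links: int        # new referring links since the last refresh (hypothetical signal)


# A twiddler here is any function that returns a score multiplier for a result.
Twiddler = Callable[[Result], float]


def engagement_twiddler(r: Result) -> float:
    # NavBoost-style idea: up to +/-20% around a neutral 50% good-click rate (invented numbers).
    return 1.0 + 0.4 * (r.good_click_rate - 0.5)


def link_twiddler(r: Result) -> float:
    # Panda-style idea: reward fresh link signals, demote their absence (invented numbers).
    return 1.1 if r.fresh_links > 0 else 0.9


def rerank(results: list[Result], twiddlers: list[Twiddler]) -> list[Result]:
    """Apply every twiddler's multiplier to each score, then re-sort highest first."""
    def adjusted(r: Result) -> float:
        score = r.score
        for twiddle in twiddlers:
            score *= twiddle(r)
        return score
    return sorted(results, key=adjusted, reverse=True)


initial = [
    Result("a.com", score=0.80, good_click_rate=0.30, fresh_links=0),
    Result("b.com", score=0.75, good_click_rate=0.70, fresh_links=3),
]
print([r.url for r in rerank(initial, [engagement_twiddler, link_twiddler])])
# ['b.com', 'a.com']
```

Here a.com starts ahead on the primary score, but weak engagement and no fresh links pull it below b.com once the twiddlers run, which is the "what happens after the click" effect in miniature.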

Learnings

To give good signals to NavBoost, Panda (and possibly Helpful Content Updates):

- Focus on delivering a good user experience that keeps users engaged and exploring other areas of your site

- Ensure your content remains up-to-date and look for natural paths to expand upon topics (such as guides and buying journeys)

- Find ways to capture the attention of influential people within your industry as a way to earn links and mentions to your content.

The existence of twiddlers and the focus on measuring user behaviour mean the SEO's job continues after the click: analysing behaviour to determine whether it was a good or bad click, and working with the business to make the bad good and the good great. If you don't pay attention after the click, you may lose to competitors who are truly customer-obsessed.

Being an Expert Entity is Important for High-Risk Industries

Context

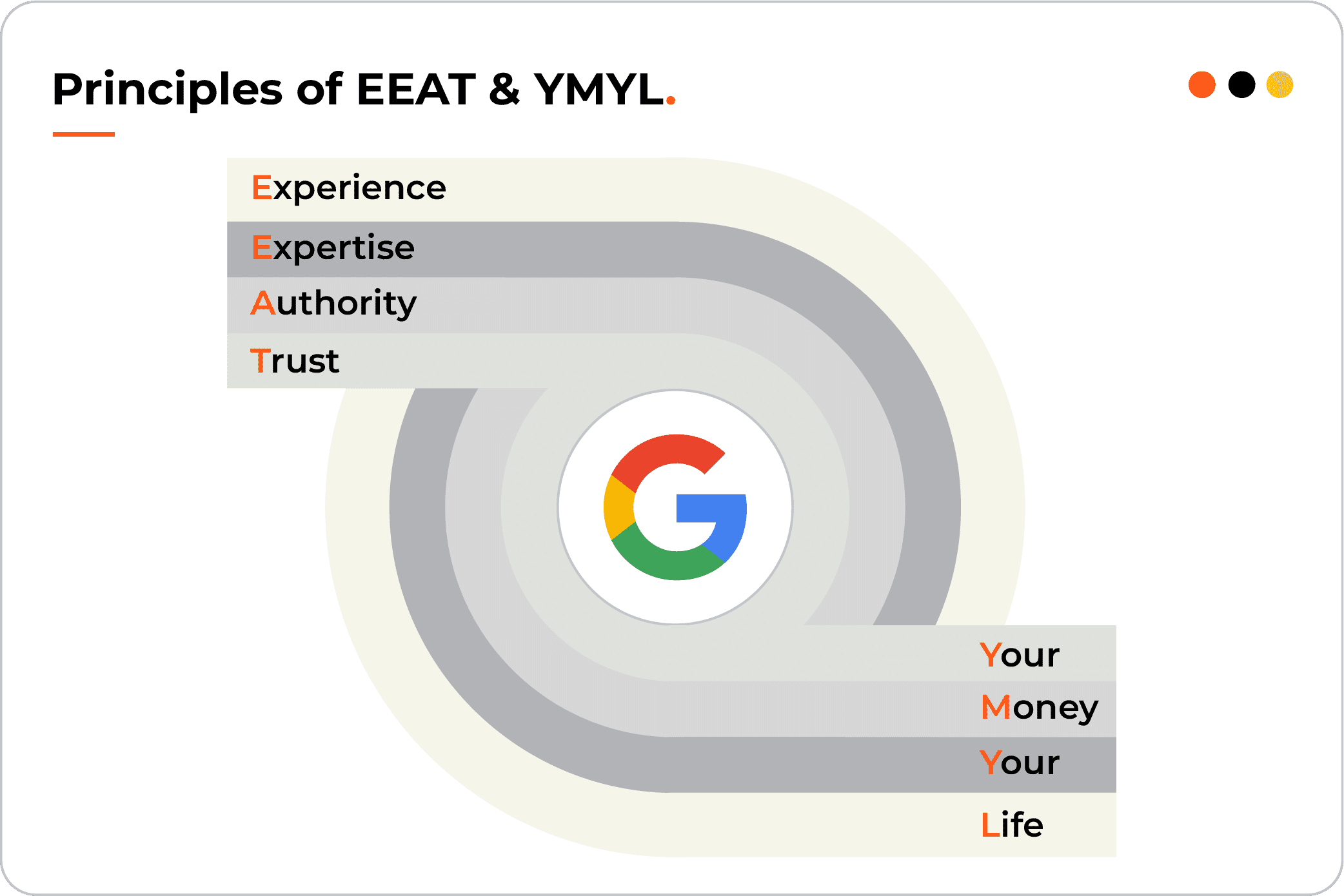

You may have heard of the principles of Experience, Expertise, Authoritativeness and Trustworthiness (E-E-A-T) and Your Money or Your Life (YMYL) put forward by Google.

E-E-A-T has been noted as particularly important for queries that could put a person's well-being (health, finance, safety) at risk if they obtained the wrong information from an untrustworthy source.

Google does a lot of work with its quality raters and systems to identify whether a query is YMYL. The more strongly a topic is YMYL (think mental health), the higher the E-E-A-T bar for websites that want to rank for that query.

You can read Google's E-E-A-T guidance in its Search Quality Rater Guidelines.

Findings

Authorship is recorded as an attribute, so content authors are likely recognised and included in Google's entity graph.

The documentation has a number of YMYL metrics, likely scoring how strongly a query is YMYL on a scale from high to low. There's also a system to predict whether a never-before-seen query should be classified as YMYL.

It was also noted that Google is vectorising content to measure how different one piece of content is from the website's overall topical focus: are you talking outside your lane and expecting to rank for another topic? If so, does this need to be addressed (site authority abuse*)? There is also a mention of "Gold Standard" documents, which suggests original research and white papers are worthwhile.
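Here's a toy Python sketch of the vectorising idea: represent each page as a vector, combine the site's pages into a centroid, and measure how far a new page sits from it using cosine distance. Simple word counts stand in for the learned embeddings a production system would more likely use, and the example pages and function names are made up for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def site_centroid(pages: list[str]) -> Counter:
    """Sum of page vectors; for cosine similarity this points the same way as the mean."""
    total: Counter = Counter()
    for page in pages:
        total.update(embed(page))
    return total


def topical_distance(page: str, site_pages: list[str]) -> float:
    """1 - cosine similarity: 0 means squarely on-topic, 1 means no overlap at all."""
    return 1.0 - cosine(embed(page), site_centroid(site_pages))


site = [
    "home loan rates explained",
    "how to refinance a home loan",
    "fixed vs variable home loan rates",
]
print(round(topical_distance("compare home loan rates", site), 2))   # 0.27
print(topical_distance("best discount coupons for sneakers", site))  # 1.0
```

A home-loan site publishing sneaker coupons lands at the far end of the scale, which is the kind of "outside your lane" content the leak suggests Google can spot.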

There is also a content-effort score, where an LLM (Large Language Model, like those behind Gemini, ChatGPT or Perplexity) estimates the effort that went into article pages, likely to measure how much GenAI was used to create the content.

Learnings

We know Google has invested significantly in building its entity graph since the advent of semantic search. For this reason, it’s in your best interest to identify the experts within your business and make them brand ambassadors on your website to strengthen your expertise and authority in Google's eyes.

If you don’t have one, find one and align them with your brand: have them author content on your website and share it with their audience over time to tie strings between them and your brand (think Kochie and Allianz).

Act on the user experience advice noted earlier and bring out your ambassador’s Expertise. You’ll earn mentions and links (signals of Authority?), and with Trust signals such as customer reviews, you’ll be on your way to becoming an E-E-A-T evangelist.

What if your industry isn't covered by YMYL, like B2C retail? Should you still do E-E-A-T? Just because you're not covered now doesn't mean Google has no plans to expand. That said, a good user experience, highlighting the expert figures within your business, and showing customer reviews to demonstrate trust will, in the end, make your brand more successful, so they're worth doing.

Summary

There were a few surprises, but much of the leak confirms what we already knew or suspected. It is nice to have validation that:

- You can't go past the basics: keyword research, targeting keywords in page titles, and strategic internal linking are crucial to success and firmly within your control.

- User experience is important - we can't skimp on it, and we need to focus as much on what happens after the click as we do in securing the click. Look carefully at where in our site and journey we may be losing people and try to turn bad clicks to good clicks by improving satisfaction, utility and usefulness.

- Link quality is important - we need to find ways to get the best ones that will also be a source of referral traffic (and that's with great content people will want to link to, not paying $100 for a link no one will see).

- We need our business and industry experts to be the champions of the brand and the authors behind our YMYL content.

If this struck a chord with you, and you need a partner to help you evolve your search approach, get in touch with our team of SEO evolutionists.

*site authority abuse = using your strength to rank for things outside your topical bounds